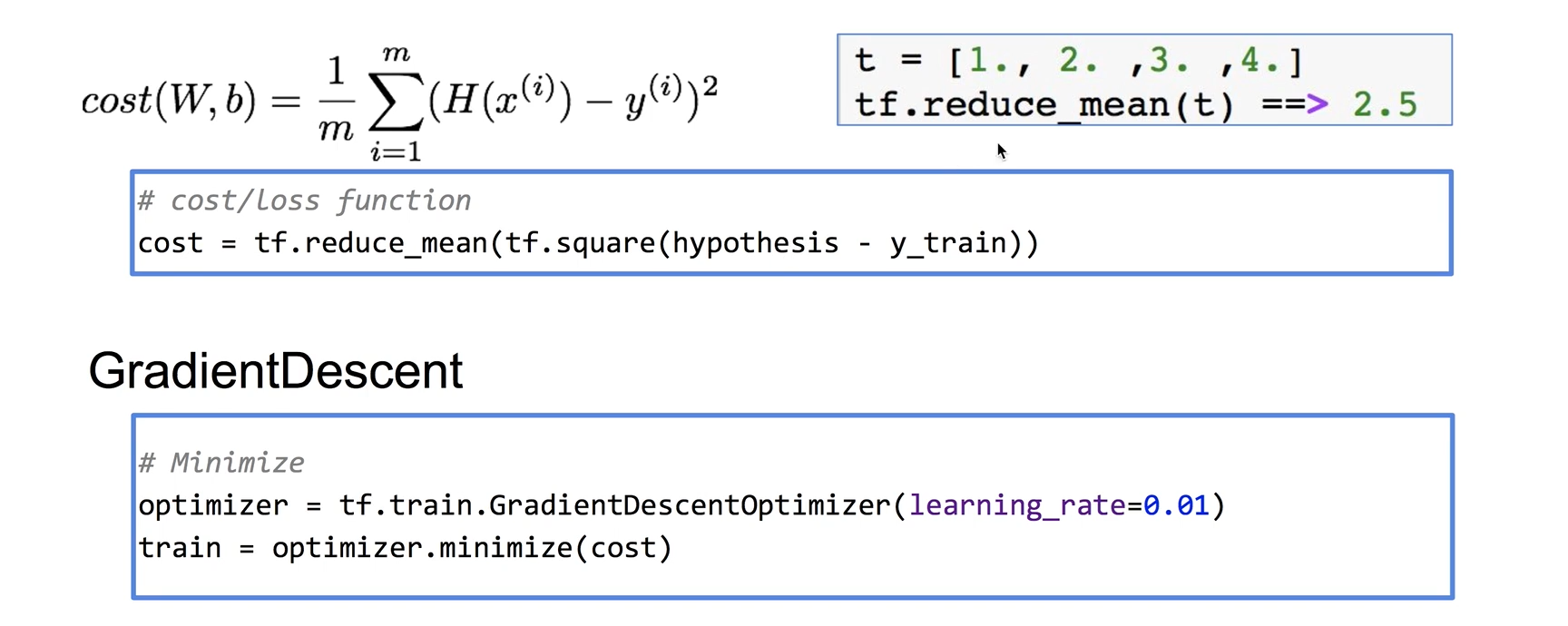

1. Hypothesis and cost function

Training means minimizing the cost value shown above.
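As a rough illustration of what "minimizing cost" means, the pure-Python sketch below evaluates a mean squared error for a few candidate (W, b) pairs on the x_train / y_train data used in this post. The helper name `cost` and the candidate values are assumptions made for illustration; they are not part of the original code.

```python
# Training data used in this post
x_train = [1, 2, 3]
y_train = [1, 2, 3]


def cost(W, b):
    """Mean squared error of the hypothesis W * x + b (hypothetical helper)."""
    errors = [(W * x + b - y) ** 2 for x, y in zip(x_train, y_train)]
    return sum(errors) / len(errors)


# Candidate parameters chosen only for illustration
for W, b in [(0.0, 0.0), (0.5, 0.5), (1.0, 0.0)]:
    print(W, b, cost(W, b))
```

The pair W = 1, b = 0 gives a cost of exactly 0, which is the point training tries to reach.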

2. Build graph using TF operations

- x_train = [1, 2, 3], y_train = [1, 2, 3] gives the model x and y values of 1, 2, 3 to learn from. (Naturally, when x = 4 we expect y = 4.)

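- W and b need to be defined, and here they are defined as a Variable. A Variable in this sense is a value that TensorFlow itself updates during training, which is why it is also called a trainable Variable.

- random_normal produces random values; with shape [1] it returns a one-dimensional array holding a single value.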

- The hypothesis can be written as hypothesis = x_train * W + b. hypothesis is a node in the graph.

- reduce_mean(t) computes the mean of the values in t.

- GradientDescent

There are several ways to minimize cost; here we use GradientDescentOptimizer.
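The following is a minimal pure-Python sketch of plain gradient descent on the same cost, to show roughly what a gradient descent optimizer does at each step. It is not TensorFlow's implementation: the starting values of W and b and the helper name `cost_and_grads` are assumptions (TensorFlow would draw the starting values at random), and the gradients are derived by hand from the mean squared error.

```python
x_train = [1, 2, 3]
y_train = [1, 2, 3]
learning_rate = 0.01  # same value the post passes to GradientDescentOptimizer

# Hypothetical starting point, fixed here so the run is reproducible
W, b = -0.4, 1.2


def cost_and_grads(W, b):
    """Return the mean squared error and its partial derivatives w.r.t. W and b."""
    n = len(x_train)
    errors = [W * x + b - y for x, y in zip(x_train, y_train)]
    cost = sum(e * e for e in errors) / n
    dW = sum(2 * e * x for e, x in zip(errors, x_train)) / n
    db = sum(2 * e for e in errors) / n
    return cost, dW, db


for step in range(2001):
    cost, dW, db = cost_and_grads(W, b)
    # Move each parameter a small step against its gradient
    W -= learning_rate * dW
    b -= learning_rate * db
    if step % 500 == 0:
        print(step, cost, W, b)
```

Under these assumptions, W drifts toward 1 and b toward 0, the same trend as in the training log in this post.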

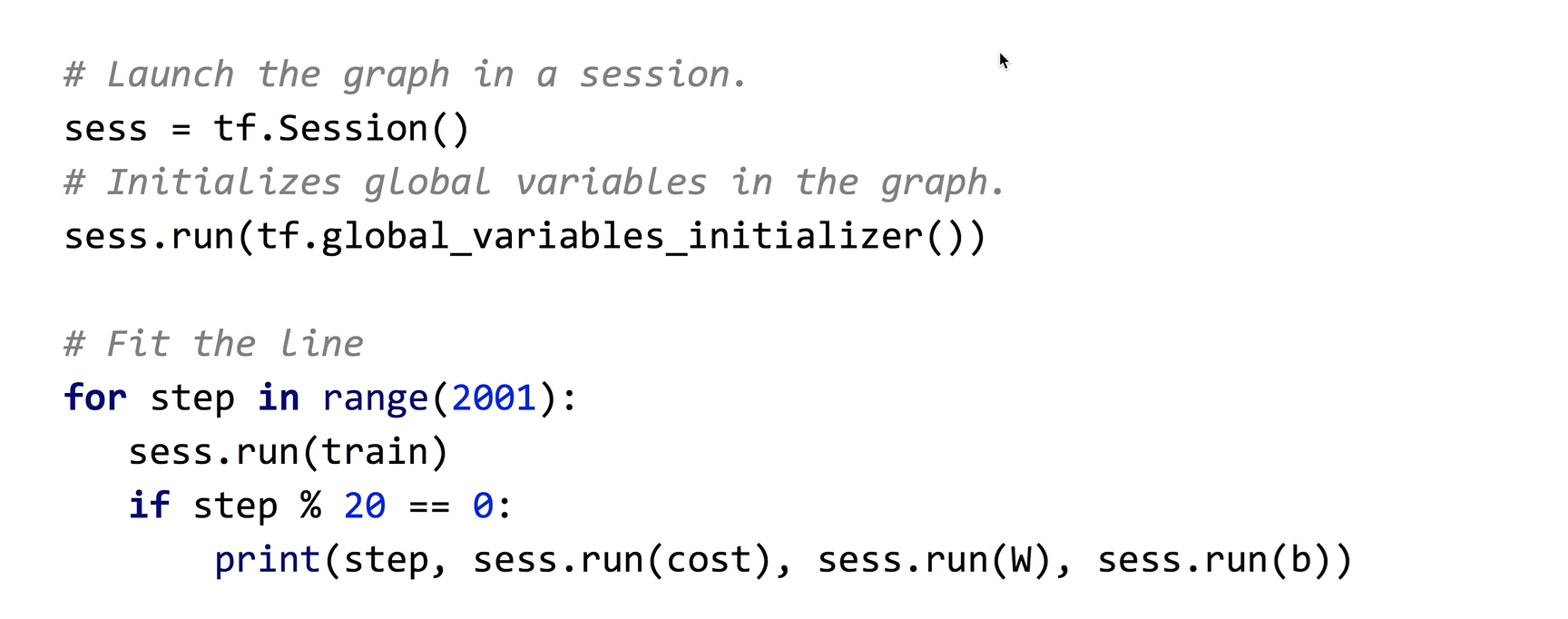

3. Run/update graph and get result

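* TensorFlow 2.0 does not use Session(), which is why the code here imports tensorflow.compat.v1 and calls tf.disable_v2_behavior().

- sess.run(tf.global_variables_initializer()) initializes W and b.

- The for loop runs training for 2001 steps (step 0 through 2000).

- Printing every step would be too much output, so cost, W, and b are printed once every 20 steps.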
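To make the logging arithmetic concrete, this small sketch counts how many steps get printed when looping over range(2001) and logging only where step % 20 == 0. The variable name `logged_steps` is illustrative.

```python
# Steps that satisfy the logging condition used in the training loop
logged_steps = [step for step in range(2001) if step % 20 == 0]

print(len(logged_steps))                   # 101 printed lines
print(logged_steps[0], logged_steps[-1])   # 0 2000
```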

import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '1'

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

# X and Y data

x_train = [1, 2, 3]

y_train = [1, 2, 3]

# Try to find values for W and b to compute y_data = x_data * W + b

# We know that W should be 1 and b should be 0

# But let TensorFlow figure it out

W = tf.Variable(tf.random_normal([1]), name="weight")

b = tf.Variable(tf.random_normal([1]), name="bias")

# Our hypothesis XW+b

hypothesis = x_train * W + b

# cost/loss function

cost = tf.reduce_mean(tf.square(hypothesis - y_train))

# optimizer

train = tf.train.GradientDescentOptimizer(learning_rate=0.01).minimize(cost)

# Launch the graph in a session.

with tf.Session() as sess:

    # Initializes global variables in the graph.

    sess.run(tf.global_variables_initializer())

    # Fit the line

    for step in range(2001):

        _, cost_val, W_val, b_val = sess.run([train, cost, W, b])

        if step % 20 == 0:

            print(step, cost_val, W_val, b_val)

From x_train and y_train alone, we can infer that y = x.

https://github.com/hunkim/DeepLearningZeroToAll/blob/master/lab-02-1-linear_regression.py

0 4.570045 [-0.37157094] [1.1860353]

20 0.3327738 [0.30366653] [1.3989786]

40 0.2676154 [0.39454955] [1.3588154]

60 0.24273884 [0.4285412] [1.2973922]

80 0.22045638 [0.45592505] [1.2366514]

100 0.20022206 [0.48154473] [1.1785556]

120 0.18184495 [0.5059149] [1.1231701]

140 0.1651545 [0.52913547] [1.0703855]

160 0.14999594 [0.55126446] [1.0200813]

180 0.13622865 [0.5723535] [0.972141]

200 0.12372508 [0.5924513] [0.926454]

220 0.11236911 [0.6116045] [0.8829141]

240 0.10205544 [0.62985766] [0.84142053]

260 0.09268838 [0.6472529] [0.80187684]

280 0.08418107 [0.66383076] [0.7641916]

300 0.07645459 [0.67962956] [0.7282774]

320 0.0694373 [0.69468576] [0.694051]

340 0.063064046 [0.70903444] [0.66143316]

360 0.0572758 [0.72270876] [0.6303482]

380 0.052018773 [0.7357404] [0.6007241]

400 0.04724425 [0.74815977] [0.5724921]

420 0.042908 [0.7599953] [0.5455871]

440 0.03896971 [0.7712746] [0.5199465]

460 0.035392944 [0.78202385] [0.49551103]

480 0.03214443 [0.7922679] [0.47222382]

500 0.029194077 [0.8020306] [0.450031]

520 0.026514536 [0.8113344] [0.4288813]

540 0.024080927 [0.820201] [0.4087254]

560 0.021870695 [0.82865083] [0.38951686]

580 0.019863313 [0.8367038] [0.371211]

600 0.018040175 [0.844378] [0.35376543]

620 0.016384369 [0.8516916] [0.33713976]

640 0.014880556 [0.85866153] [0.32129547]

660 0.013514764 [0.86530393] [0.30619586]

680 0.012274325 [0.8716342] [0.29180577]

700 0.011147744 [0.87766695] [0.27809194]

720 0.010124549 [0.88341606] [0.2650226]

740 0.009195277 [0.8888951] [0.2525675]

760 0.008351304 [0.89411664] [0.24069777]

780 0.0075847786 [0.89909273] [0.22938587]

800 0.006888623 [0.90383506] [0.21860558]

820 0.0062563564 [0.9083544] [0.20833193]

840 0.0056821294 [0.91266143] [0.19854113]

860 0.0051605976 [0.916766] [0.1892104]

880 0.004686937 [0.9206778] [0.18031819]

900 0.0042567495 [0.9244056] [0.17184387]

920 0.0038660485 [0.92795825] [0.16376787]

940 0.0035112074 [0.931344] [0.15607134]

960 0.0031889342 [0.93457055] [0.14873655]

980 0.002896243 [0.93764544] [0.1417465]

1000 0.0026304133 [0.9405759] [0.13508493]

1020 0.002388979 [0.9433686] [0.12873644]

1040 0.00216971 [0.9460301] [0.12268628]

1060 0.0019705684 [0.9485664] [0.11692051]

1080 0.0017896983 [0.9509837] [0.11142563]

1100 0.0016254311 [0.95328724] [0.10618903]

1120 0.0014762423 [0.9554826] [0.10119853]

1140 0.0013407505 [0.9575747] [0.09644257]

1160 0.0012176932 [0.95956856] [0.09191015]

1180 0.0011059236 [0.9614687] [0.08759069]

1200 0.0010044194 [0.96327955] [0.08347426]

1220 0.0009122326 [0.9650052] [0.07955129]

1240 0.0008285015 [0.96664983] [0.07581268]

1260 0.00075246085 [0.96821713] [0.07224979]

1280 0.0006833964 [0.9697109] [0.06885435]

1300 0.00062066916 [0.9711344] [0.06561839]

1320 0.0005637013 [0.9724909] [0.06253456]

1340 0.0005119643 [0.97378373] [0.05959568]

1360 0.00046497353 [0.9750158] [0.05679492]

1380 0.0004222969 [0.97619003] [0.05412576]

1400 0.00038353706 [0.977309] [0.05158203]

1420 0.00034833435 [0.9783753] [0.04915787]

1440 0.0003163648 [0.9793915] [0.04684767]

1460 0.0002873258 [0.9803602] [0.04464605]

1480 0.00026095452 [0.9812832] [0.0425478]

1500 0.0002370026 [0.9821628] [0.0405482]

1520 0.00021524967 [0.9830011] [0.03864257]

1540 0.00019549165 [0.9838] [0.03682648]

1560 0.00017755032 [0.9845613] [0.03509577]

1580 0.00016125313 [0.9852869] [0.03344638]

1600 0.00014645225 [0.98597836] [0.03187453]

1620 0.00013301123 [0.9866373] [0.03037656]

1640 0.000120802724 [0.9872653] [0.02894899]

1660 0.00010971467 [0.9878638] [0.02758849]

1680 9.964479e-05 [0.98843414] [0.02629196]

1700 9.0500165e-05 [0.9889777] [0.02505637]

1720 8.219385e-05 [0.9894957] [0.02387884]

1740 7.464935e-05 [0.98998946] [0.02275658]

1760 6.779731e-05 [0.9904599] [0.02168702]

1780 6.15738e-05 [0.9909082] [0.02066777]

1800 5.5922355e-05 [0.9913355] [0.01969645]

1820 5.078956e-05 [0.99174273] [0.01877078]

1840 4.6127447e-05 [0.99213076] [0.01788862]

1860 4.189448e-05 [0.9925006] [0.01704792]

1880 3.8048653e-05 [0.99285305] [0.01624673]

1900 3.455651e-05 [0.99318886] [0.01548321]

1920 3.1384752e-05 [0.993509] [0.01475557]

1940 2.8504479e-05 [0.99381405] [0.0140621]

1960 2.5888083e-05 [0.99410474] [0.01340124]

1980 2.3512248e-05 [0.9943818] [0.01277145]

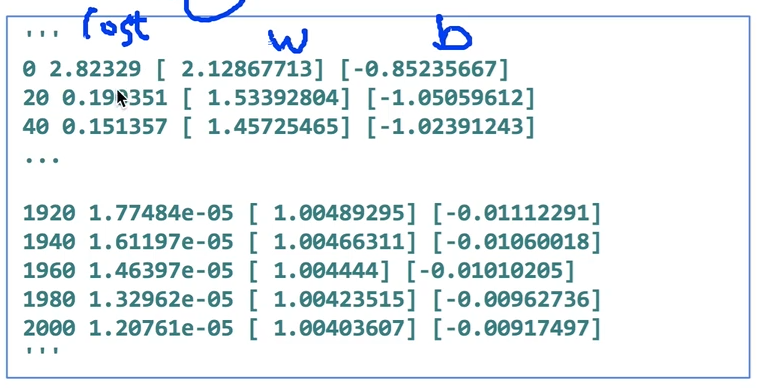

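2000 2.1353997e-05 [0.99464583] [0.01217124]

As the number of steps grows, cost converges to a very small value, W converges to 1, and b converges to 0.

With a placeholder, you can feed in different values directly at run time.

- In a placeholder, shape=[None] means the tensor can hold any number of values.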

import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '1'

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

# Try to find values for W and b to compute Y = W * X + b

W = tf.Variable(tf.random_normal([1]), name="weight")

b = tf.Variable(tf.random_normal([1]), name="bias")

# placeholders for a tensor that will be always fed using feed_dict

# See http://stackoverflow.com/questions/36693740/

X = tf.placeholder(tf.float32, shape=[None])

Y = tf.placeholder(tf.float32, shape=[None])

# Our hypothesis is X * W + b

hypothesis = X * W + b

# cost/loss function

cost = tf.reduce_mean(tf.square(hypothesis - Y))

# optimizer

train = tf.train.GradientDescentOptimizer(learning_rate=0.01).minimize(cost)

# Launch the graph in a session.

with tf.Session() as sess:

    # Initializes global variables in the graph.

    sess.run(tf.global_variables_initializer())

    # Fit the line

    for step in range(2001):

        _, cost_val, W_val, b_val = sess.run(

            [train, cost, W, b], feed_dict={X: [1, 2, 3, 4, 5], Y: [2.1, 3.1, 4.1, 5.1, 6.1]}

        )

        if step % 20 == 0:

            print(step, cost_val, W_val, b_val)

From the fed X and Y values alone, we can infer that y = x + 1.1.

0 89.63948 [-0.5100383] [-1.2930396]

20 0.540154 [1.4656237] [-0.6164825]

40 0.47013456 [1.4436066] [-0.5017207]

60 0.41057214 [1.4145927] [-0.3968122]

80 0.3585555 [1.3874409] [-0.29878473]

100 0.31312934 [1.3620671] [-0.20717724]

120 0.27345806 [1.3383551] [-0.12156917]

140 0.23881304 [1.316196] [-0.04156766]

160 0.20855728 [1.295488] [0.03319446]

180 0.18213454 [1.2761363] [0.10306045]

200 0.15905948 [1.2580519] [0.1683508]

220 0.13890788 [1.2411518] [0.22936524]

240 0.1213092 [1.2253585] [0.2863838]

260 0.10594027 [1.2105997] [0.33966824]

280 0.09251839 [1.1968073] [0.38946304]

300 0.080796994 [1.1839182] [0.43599662]

320 0.07056058 [1.1718733] [0.47948268]

340 0.061621152 [1.1606172] [0.5201208]

360 0.053814214 [1.1500983] [0.55809754]

380 0.046996363 [1.1402682] [0.59358716]

400 0.041042235 [1.1310819] [0.62675244]

420 0.035842523 [1.1224972] [0.6577458]

440 0.031301547 [1.1144748] [0.68670946]

460 0.027335847 [1.1069778] [0.71377623]

480 0.02387261 [1.0999717] [0.73907024]

500 0.020848121 [1.0934244] [0.7627077]

520 0.018206833 [1.087306] [0.78479725]

540 0.015900169 [1.0815883] [0.8054402]

560 0.01388572 [1.076245] [0.82473123]

580 0.0121265035 [1.0712516] [0.8427587]

600 0.010590159 [1.0665853] [0.8596057]

620 0.009248455 [1.0622245] [0.87534934]

640 0.008076762 [1.0581495] [0.890062]

660 0.0070534833 [1.0543412] [0.9038109]

680 0.006159871 [1.0507823] [0.9166595]

700 0.0053794472 [1.0474566] [0.92866665]

720 0.0046979147 [1.0443486] [0.93988746]

740 0.0041027414 [1.0414442] [0.9503732]

760 0.003582944 [1.03873] [0.96017236]

780 0.003129019 [1.0361936] [0.9693297]

800 0.0027325884 [1.0338231] [0.97788745]

820 0.0023863944 [1.0316081] [0.9858846]

840 0.002084062 [1.029538] [0.9933581]

860 0.0018200235 [1.0276036] [1.0003421]

880 0.0015894463 [1.0257958] [1.0068686]

900 0.001388067 [1.0241065] [1.012968]

920 0.0012122168 [1.0225278] [1.0186676]

940 0.0010586402 [1.0210526] [1.023994]

960 0.0009245202 [1.0196736] [1.0289717]

980 0.0008073845 [1.0183852] [1.0336235]

1000 0.0007050986 [1.017181] [1.0379704]

1020 0.0006157699 [1.016056] [1.0420327]

1040 0.0005377593 [1.0150045] [1.045829]

1060 0.00046963594 [1.0140219] [1.0493765]

1080 0.00041013298 [1.0131036] [1.0526918]

1100 0.000358176 [1.0122454] [1.0557901]

1120 0.00031279214 [1.0114434] [1.0586855]

1140 0.00027316582 [1.010694] [1.0613912]

1160 0.00023855995 [1.0099937] [1.0639198]

1180 0.00020833302 [1.0093391] [1.0662829]

1200 0.000181936 [1.0087274] [1.0684911]

1220 0.0001588808 [1.0081557] [1.0705551]

1240 0.00013875077 [1.0076215] [1.0724835]

1260 0.000121172576 [1.0071225] [1.0742856]

1280 0.0001058207 [1.006656] [1.0759696]

1300 9.2415554e-05 [1.0062201] [1.0775434]

1320 8.0707374e-05 [1.0058128] [1.079014]

1340 7.048451e-05 [1.0054321] [1.0803882]

1360 6.155305e-05 [1.0050764] [1.0816725]

1380 5.37534e-05 [1.0047439] [1.0828729]

1400 4.694568e-05 [1.0044333] [1.0839945]

1420 4.099901e-05 [1.0041429] [1.0850426]

1440 3.5804078e-05 [1.0038717] [1.086022]

1460 3.1269286e-05 [1.0036181] [1.0869374]

1480 2.7306687e-05 [1.0033811] [1.0877929]

1500 2.3847457e-05 [1.0031598] [1.0885924]

1520 2.0825722e-05 [1.0029527] [1.0893395]

1540 1.8190036e-05 [1.0027596] [1.0900371]

1560 1.588315e-05 [1.0025789] [1.0906899]

1580 1.3871327e-05 [1.0024098] [1.0912997]

1600 1.2113875e-05 [1.0022521] [1.0918695]

1620 1.0579322e-05 [1.0021045] [1.0924019]

1640 9.239109e-06 [1.0019667] [1.0928994]

1660 8.068504e-06 [1.001838] [1.0933645]

1680 7.046337e-06 [1.0017176] [1.093799]

1700 6.1539417e-06 [1.001605] [1.094205]

1720 5.3741333e-06 [1.0015] [1.0945846]

1740 4.6937794e-06 [1.0014018] [1.0949391]

1760 4.098376e-06 [1.00131] [1.0952706]

1780 3.5797577e-06 [1.0012243] [1.0955802]

1800 3.126234e-06 [1.0011439] [1.0958697]

1820 2.730156e-06 [1.0010691] [1.0961401]

1840 2.3841878e-06 [1.0009991] [1.096393]

1860 2.0815778e-06 [1.0009336] [1.0966294]

1880 1.8181236e-06 [1.0008725] [1.0968502]

1900 1.5877906e-06 [1.0008154] [1.0970564]

1920 1.3866174e-06 [1.0007619] [1.0972492]

1940 1.210977e-06 [1.000712] [1.0974293]

1960 1.0574473e-06 [1.0006655] [1.0975976]

1980 9.2368873e-07 [1.0006218] [1.0977548]

2000 8.069102e-07 [1.0005811] [1.0979017]

With the values fed in as above, cost becomes very small, W converges to 1, and b converges to 1.1.
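As a cross-check on those limits, the sketch below solves the same one-feature fit in closed form using ordinary least squares instead of gradient descent. This swaps in a different technique from the one in the post, purely to confirm where training should end up; the variable names are illustrative.

```python
# Data fed through feed_dict in the placeholder example
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.1, 4.1, 5.1, 6.1]

mean_x = sum(xs) / len(xs)
mean_y = sum(ys) / len(ys)

# Ordinary least squares for a single feature:
#   W = cov(x, y) / var(x),  b = mean_y - W * mean_x
cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
var_x = sum((x - mean_x) ** 2 for x in xs)

W = cov_xy / var_x
b = mean_y - W * mean_x
print(W, b)
```

Up to floating-point rounding this gives W = 1 and b = 1.1, consistent with the last logged values of about 1.0006 and 1.0979.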
